Abstract:

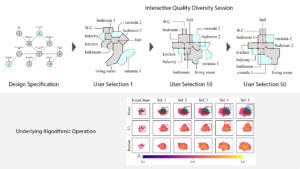

“Computer-aided optimization algorithms in structural engineering have historically focused on the structural performance of generated forms, often resulting in the selection of a single ‘optimal’ solution. However, diversity of generated solutions is desirable when those solutions are shown to a human user to choose from. Quality-Diversity (QD) search is an emerging field of Evolutionary Computation which can automate the exploration of the solution space in engineering problems. QD algorithms, such as MAP-Elites, operate by maintaining and expanding an archive of diverse solutions, optimising for quality in local niches of a multidimensional design space. The generated archive of solutions can help engineers gain a better overview of the solution space, illuminating which designs are possible and their trade-offs. In this paper we apply Quality Diversity search to the problem of designing shell structures. Since the design of shell structures comes with physical constraints, we leverage a constrained optimization variant of the MAP-Elites algorithm, FI-MAP-Elites. We implement our proposed methodology within the Rhino/Grasshopper environment and use the Karamba Finite Element Analysis solver for all structural engineering calculations. We test our method on case studies of parametric models of shell structures that feature varying complexity. Our experiments investigate the algorithm’s ability to illuminate the solution space and generate feasible and high-quality solutions.”
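The archive-based loop that the abstract attributes to MAP-Elites can be illustrated with a minimal sketch. This is not the paper's implementation: it is plain MAP-Elites without the feasible-infeasible constraint handling of FI-MAP-Elites, and it does not touch Rhino/Grasshopper or Karamba. The names `map_elites`, `toy_fitness` and `toy_descriptor`, the genome encoding and all parameter values are hypothetical and chosen only for illustration.

```python
import random

def toy_fitness(genome):
    # Hypothetical quality measure (higher is better); a stand-in for a
    # structural performance score that a solver would normally provide.
    return -sum((g - 0.5) ** 2 for g in genome)

def toy_descriptor(genome):
    # Hypothetical behaviour descriptor: the first two genes, each in [0, 1].
    return genome[0], genome[1]

def map_elites(evaluate, describe, genome_len=4, bins=10,
               n_init=100, n_iter=2000, sigma=0.1, seed=0):
    rng = random.Random(seed)
    archive = {}  # maps a grid cell (niche) to its elite: (fitness, genome)

    def try_insert(genome):
        fitness = evaluate(genome)
        # Discretise each descriptor value into one of `bins` cells.
        cell = tuple(min(int(d * bins), bins - 1) for d in describe(genome))
        # Keep the candidate only if its niche is empty or it beats the elite.
        if cell not in archive or fitness > archive[cell][0]:
            archive[cell] = (fitness, genome)

    # Seed the archive with random genomes.
    for _ in range(n_init):
        try_insert([rng.random() for _ in range(genome_len)])

    # Repeatedly pick a stored elite, mutate it, and try to insert the child.
    for _ in range(n_iter):
        _, parent = rng.choice(list(archive.values()))
        child = [min(1.0, max(0.0, g + rng.gauss(0.0, sigma))) for g in parent]
        try_insert(child)

    return archive
```

Calling `map_elites(toy_fitness, toy_descriptor)` returns a dictionary with at most `bins * bins` entries, one elite per occupied niche. Inspecting which cells are filled, and with what fitness, gives the kind of solution-space overview the abstract describes.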

Citation:

Konstantinos Sfikas, Antonios Liapis, Joel Hilmersson, Jeg Dudley, Edoardo Tibuzzi and Georgios N. Yannakakis: “Design Space Exploration of Shell Structures Using Quality Diversity Algorithms,” in Proceedings of the International Association for Shell and Spatial Structures Symposium, 2023.

BibTeX:

@inproceedings{sfikas2023shell,
  author={Konstantinos Sfikas and Antonios Liapis and Joel Hilmersson and Jeg Dudley and Edoardo Tibuzzi and Georgios N. Yannakakis},
  title={Design Space Exploration of Shell Structures Using Quality Diversity Algorithms},
  booktitle={Proceedings of the International Association for Shell and Spatial Structures Symposium},
  year={2023},
}